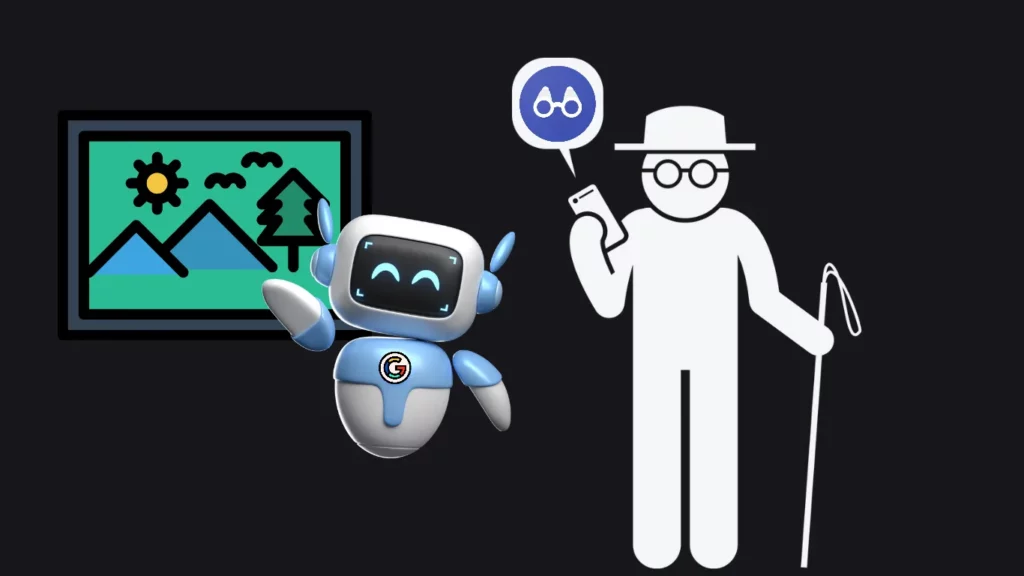

Google’s Lookout app has introduced a feature called ‘Image Question and Answer’ (Image Q&A), which uses AI to assist people with visual impairments.

The feature lets users receive detailed descriptions of images and ask questions about them, supporting their independence and their understanding of the world around them.

> How can AI help blind and partially-sighted people better perceive the world? ✨
>
> We explain how our technology powers Image Q&A: a feature in @Google's Lookout app enabling communities to ask questions about images.
>
> Watch the Audio Described version → https://t.co/KNqc6KbTWo pic.twitter.com/TLzNo7Ub0o
>
> Google DeepMind (@GoogleDeepMind), February 6, 2024

Before the advent of generative AI, the Google Lookout app was already aiding the visually impaired community.

Launched in 2019, Lookout has gradually added features for a wider range of user needs, and Image Q&A is a notable step forward for its accessibility.

With Image Q&A, users can ask about many aspects of an image, from a scenic landscape to the text on a sign, and receive AI-generated answers that help them engage with their surroundings more independently.

Lookout offers more than Image Q&A: Text mode reads text aloud, and Food Label mode identifies packaged foods. Together, these features reflect Google’s broader focus on inclusivity.

In a world reliant on visual information, technologies like Google Lookout’s Image Q&A are crucial for individuals with visual impairments.

By narrowing the accessibility gap, features like this improve everyday experiences for users and show how AI can help build a more inclusive society for people of all abilities.